OpenAI Integration Guide

This guide covers how to integrate OpenAI's API into your Cue flows. You can use OpenAI for chat completions, function calling, and structured responses to enhance your customer service automation across WhatsApp, WebChat, and Messenger channels.

| Functionality | Possible | Notes |
|---|---|---|
| Create a function call | ✅ | using chatbot flows |
| Create a web search response | ✅ | using chatbot flows |
| Create an image response | ✅ | using chatbot flows |

Overview

- Requirements for integration

- Setting up OpenAI API access

- Chat Completion integration

- Function Calling integration

Requirements for integration

- A Cue account with access to manage flows

- An OpenAI API account with a valid API key

- Understanding of JSON formatting for API requests

- Basic knowledge of Cue's Flow Builder and HTTP request nodes

Setting up OpenAI API access

Before integrating with Cue, you'll need to set up your OpenAI API access:

- Sign up for an OpenAI account at platform.openai.com

- Navigate to the API Keys section

- Create a new API key and copy it securely

- Add credits to your account for API usage

Important: Keep your API key secure and never expose it in client-side code or public repositories.
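When testing requests outside Cue, one way to keep the key out of source files is to read it from an environment variable. The sketch below assumes a variable named `OPENAI_API_KEY` and a hypothetical helper called `authHeaders`; neither is a Cue setting. Inside Cue, the key goes in the HTTP node's Token field instead.

```javascript
// Hypothetical helper: build request headers from a key held in the environment.
// OPENAI_API_KEY is an assumed variable name, not something Cue requires.
function authHeaders(env = process.env) {
  const key = env.OPENAI_API_KEY;
  if (!key) {
    throw new Error("OPENAI_API_KEY is not set");
  }
  return {
    Authorization: `Bearer ${key}`,
    "Content-Type": "application/json",
  };
}

// Usage with a stand-in environment object (a placeholder, not a real key).
const headers = authHeaders({ OPENAI_API_KEY: "ExampleToken" });
console.log(headers.Authorization); // Bearer ExampleToken
```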

Chat Completion Integration

This section shows how to use a simple Chat Completion to summarize the entire bot-to-customer conversation at the end of a Cue flow, then hand the summary off to a live agent or to an external system (CRM, ticketing, etc.).

When to use Chat Completion

- Summarize a multi-step chatbot interaction for a human agent

- Generate a plain-text recap for logging or archival

Stringify the Conversation

- Open your flow in the Cue Flow Builder

- Add a Function step

- Configure the Input: {"conversation":"{{session.conversation.plainText}}"}

- Save result as: conversationString

- Code:

      module.exports = function main(input) {
        // Serialize the conversation as a JSON string literal (escapes quotes and line breaks)
        const convoString = JSON.stringify(input.conversation);
        return convoString;
      }

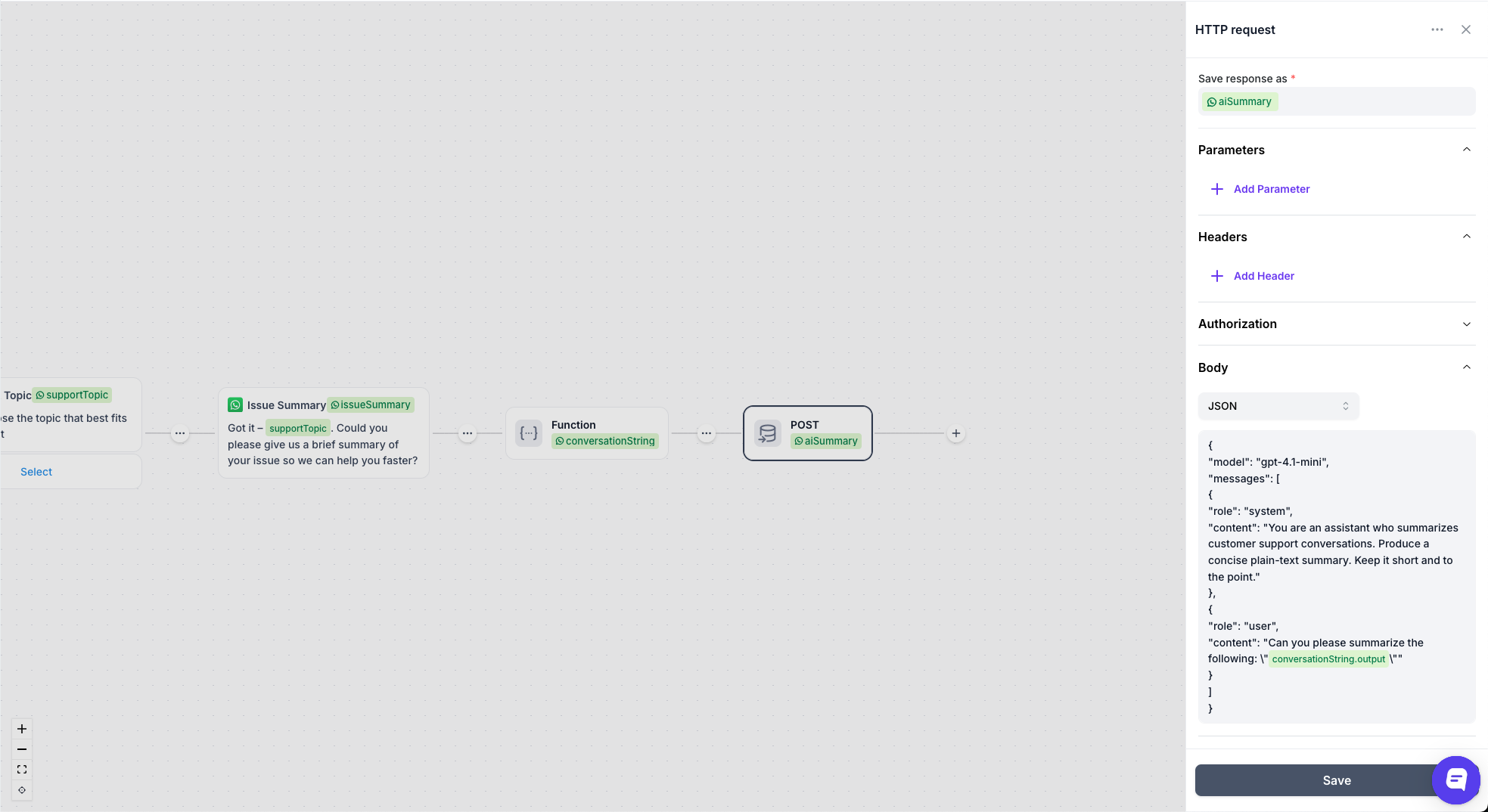
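To see what the Function step produces, the sketch below runs the same logic on a made-up conversation. Note that `JSON.stringify` also wraps the text in double quotes. This guide does not say how Cue substitutes the value into the HTTP body, so the hypothetical `escapeForJsonString` variant, which drops the outer quotes, is only an option to try if the resulting body turns out to be invalid JSON.

```javascript
// Same logic as the Function step above.
function main(input) {
  return JSON.stringify(input.conversation);
}

// Hypothetical variant: escape the text but drop the surrounding quotes,
// for templates that already wrap the placeholder in quotes.
function escapeForJsonString(text) {
  return JSON.stringify(text).slice(1, -1);
}

// Made-up sample text, not real Cue session data.
const sample = {
  conversation: 'Bot: Hi!\nCustomer: My "Pro" plan was charged twice.',
};

const literal = main(sample);
console.log(literal);
// "Bot: Hi!\nCustomer: My \"Pro\" plan was charged twice."

// The escaped text drops cleanly into a hand-written JSON template.
const body = `{"content": "${escapeForJsonString(sample.conversation)}"}`;
console.log(JSON.parse(body).content === sample.conversation); // true
```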

Basic HTTP Setup

- Open your flow in the Cue Flow Builder

- Add an HTTP request step where you want the summarization to occur.

- Save response as: aiSummary

- Configure the HTTP request with the following settings:

  - URL: https://api.openai.com/v1/chat/completions
  - Method: POST
  - Authorization: Bearer Token
  - Token: ExampleToken
  - Body:

        {
          "model": "gpt-4.1-mini",
          "messages": [
            {
              "role": "system",
              "content": "You are an assistant who summarizes customer support conversations. Produce a concise plain-text summary. Keep it short and to the point."
            },
            {
              "role": "user",
              "content": "Can you please summarize the following: \"{{session.conversationString.output}}\""
            }
          ]
        }

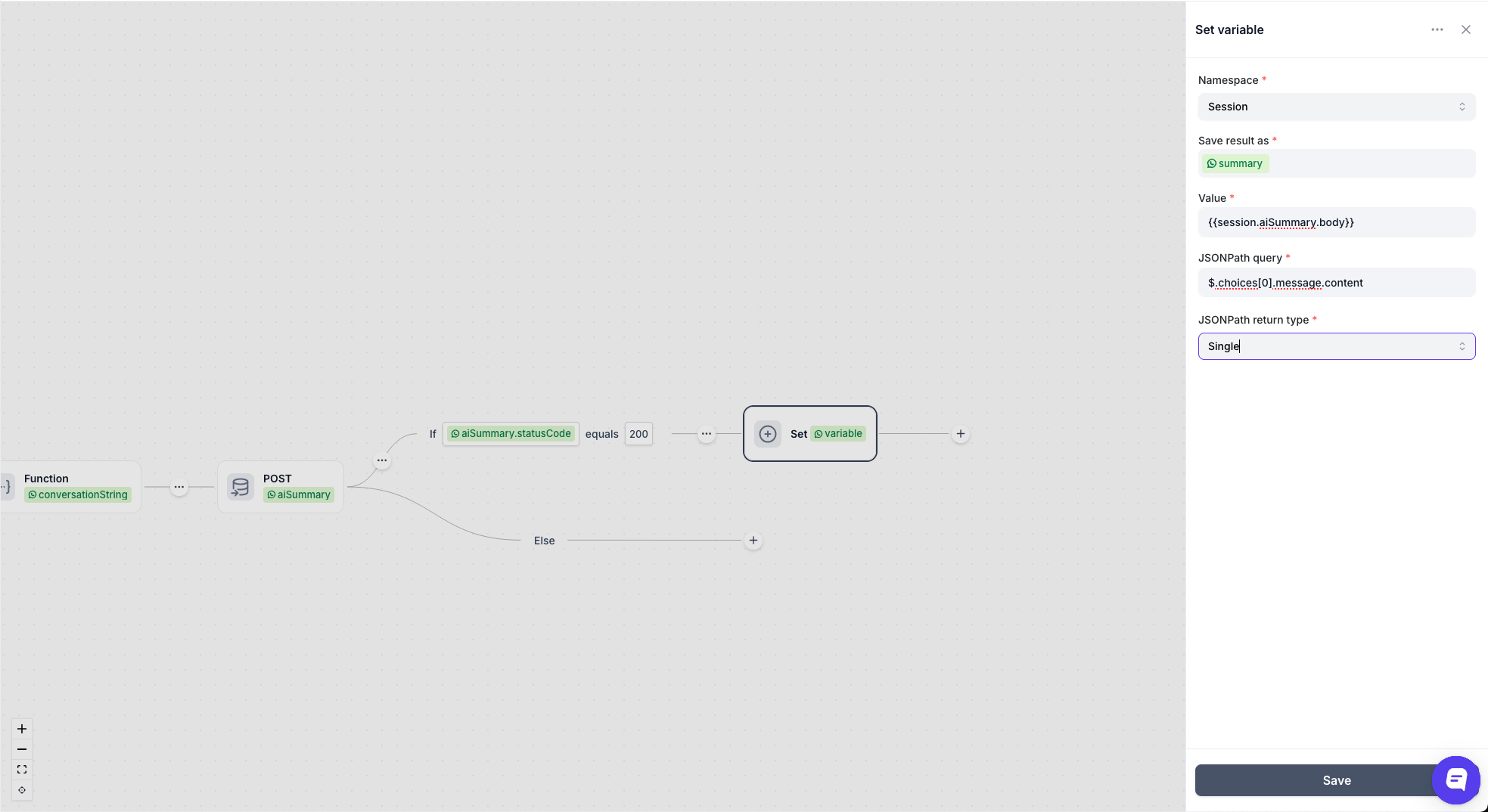
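For testing the same request outside Cue, the settings above can be sketched in plain JavaScript. `buildSummaryRequest` and `requestSummary` are hypothetical helper names, and `requestSummary` assumes the global `fetch` available in Node 18 or later; this is not how Cue itself sends the request.

```javascript
// Build the same request body as the HTTP step above from a conversation string.
function buildSummaryRequest(conversation, model = "gpt-4.1-mini") {
  return {
    model,
    messages: [
      {
        role: "system",
        content:
          "You are an assistant who summarizes customer support conversations. " +
          "Produce a concise plain-text summary. Keep it short and to the point.",
      },
      {
        role: "user",
        content: `Can you please summarize the following: "${conversation}"`,
      },
    ],
  };
}

// Send the request and return the summary text.
// apiKey is supplied by the caller; nothing here is specific to Cue.
async function requestSummary(apiKey, conversation) {
  const res = await fetch("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
    // JSON.stringify handles all escaping of the conversation text.
    body: JSON.stringify(buildSummaryRequest(conversation)),
  });
  if (!res.ok) {
    throw new Error(`OpenAI request failed with status ${res.status}`);
  }
  const data = await res.json();
  return data.choices[0].message.content;
}

// Inspect the payload without making a network call.
const preview = buildSummaryRequest("Customer: Where is my order?");
console.log(JSON.stringify(preview, null, 2));
```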

- Add a Set node:

  - Save result as: summary
  - Value: {{session.aiSummary.body}}
  - JSON Path Query: $.choices[0].message.content
  - JSON Return Type: single

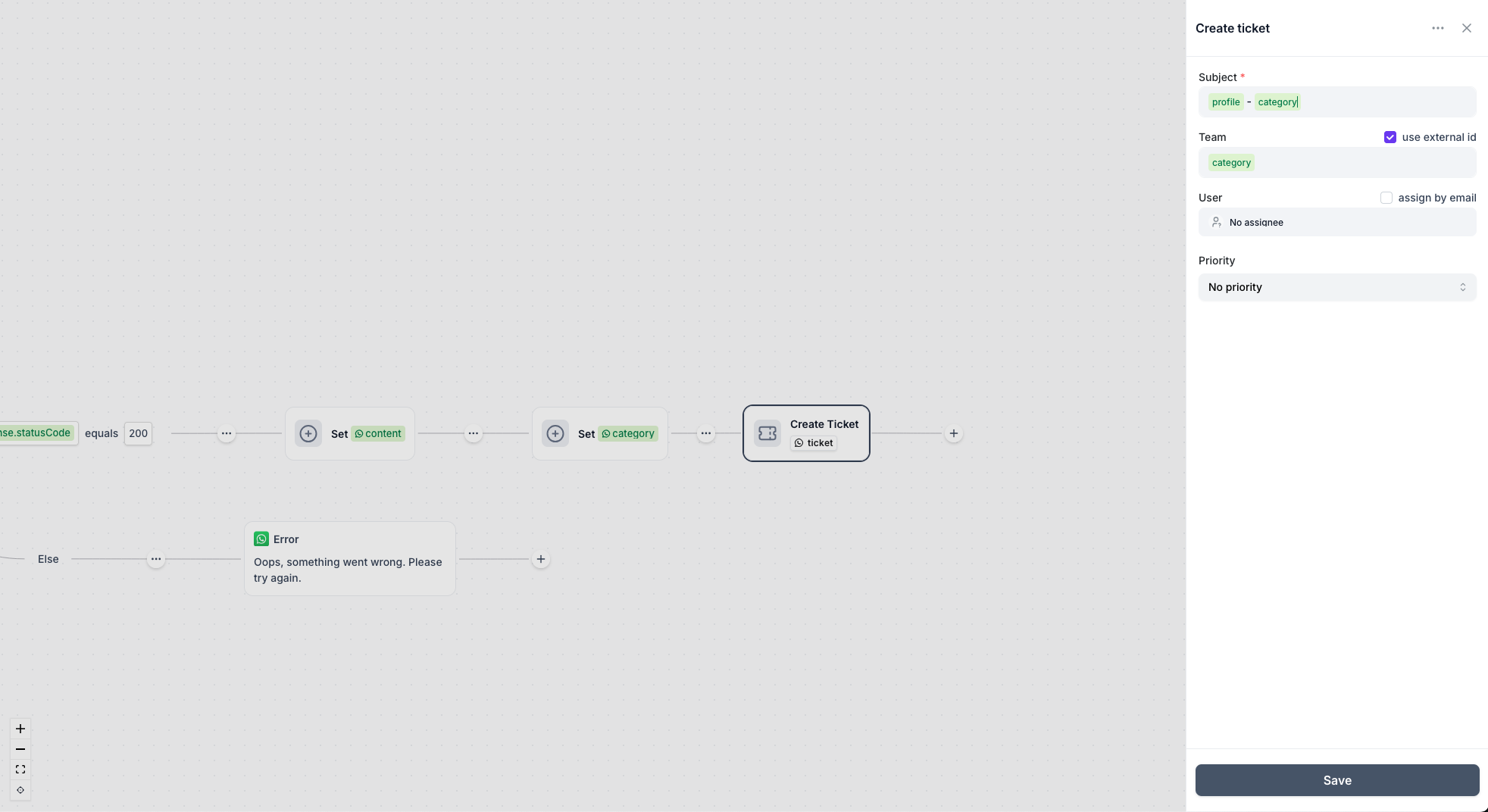
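The JSON Path Query `$.choices[0].message.content` picks the assistant's text out of the response body. As a rough illustration, the equivalent lookup in plain JavaScript is shown below on a trimmed, made-up response; `extractContent` is a hypothetical helper name.

```javascript
// Trimmed-down response in the Chat Completions shape (made-up content).
const aiSummaryBody = {
  choices: [
    {
      index: 0,
      message: {
        role: "assistant",
        content: "Customer reported a double charge; bot collected the invoice number.",
      },
      finish_reason: "stop",
    },
  ],
};

// Equivalent of the JSON Path Query $.choices[0].message.content.
// Optional chaining yields undefined instead of throwing when a level is missing.
function extractContent(body) {
  return body?.choices?.[0]?.message?.content;
}

console.log(extractContent(aiSummaryBody));
// Customer reported a double charge; bot collected the invoice number.

// An error-shaped body has no choices array, so nothing is extracted.
console.log(extractContent({ error: { message: "Invalid API key" } })); // undefined
```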

Push to External API

- Add another HTTP Request node:

  - URL: your-crm.example.com/api/tickets
  - Method: POST
  - Body:

        {
          "summary": "{{session.summary}}"
        }

- Error Handling

  - In your HTTP node, check {{session.response.statusCode}} == 200
  - On success → parse and continue
  - On failure → set a fallback message or skip summarization

- Best Practices

  - Keep your system prompt as concise as possible
  - Avoid sending sensitive PII; mask or truncate as needed
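The error-handling and masking advice above could be sketched in a Function step along these lines. This is only an illustration: `maskPii` and `summaryOrFallback` are hypothetical helpers, the regular expressions are deliberately simple, and the `{ statusCode, body }` response shape (with `body` already parsed into an object) is an assumption modeled on the session variables used in this guide.

```javascript
// Mask e-mail addresses and long digit runs before text is sent to the API.
// These patterns are deliberately simple and will miss some formats.
function maskPii(text) {
  return text
    .replace(/[\w.+-]+@[\w-]+(\.[\w-]+)+/g, "[email]")
    .replace(/\d{6,}/g, "[number]");
}

// Return the summary on HTTP 200, otherwise a fallback message.
// The { statusCode, body } shape is an assumption, not a documented Cue type.
function summaryOrFallback(response, fallback = "Summary unavailable.") {
  if (!response || response.statusCode !== 200) {
    return fallback;
  }
  const content = response.body?.choices?.[0]?.message?.content;
  return typeof content === "string" && content.length > 0 ? content : fallback;
}

console.log(maskPii("Mail jane.doe@example.com or call 0821234567"));
// Mail [email] or call [number]

console.log(summaryOrFallback({ statusCode: 429, body: {} }));
// Summary unavailable.
```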

Function Calling Integration

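Function calling allows the model to determine when to call specific functions based on the conversation context. This is ideal for categorizing queries. The setup in this section gets a machine-readable category by constraining the reply to a JSON schema through the response_format parameter (OpenAI's Structured Outputs feature).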

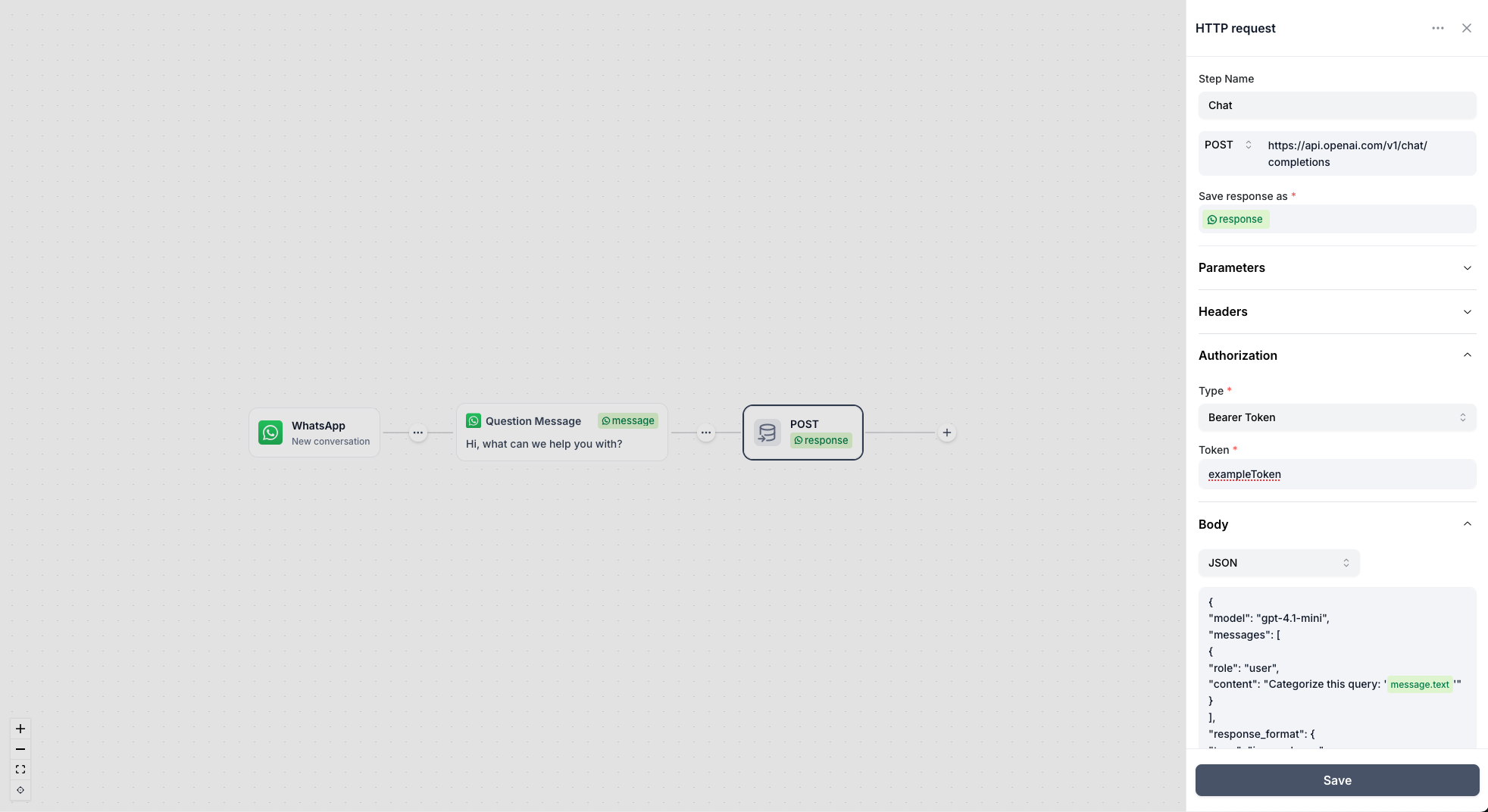
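For comparison, a Chat Completions request that uses function calling proper passes function definitions in a `tools` array, and the model replies with `tool_calls` when it decides a function applies. The sketch below is an illustration only: `route_query`, `buildToolRequest`, and `readToolCall` are hypothetical names, and the category list is borrowed from the schema used in this section.

```javascript
// Request body that offers the model one callable function via "tools".
// route_query and its parameters are hypothetical names for illustration.
function buildToolRequest(userText) {
  return {
    model: "gpt-4.1-mini",
    messages: [{ role: "user", content: userText }],
    tools: [
      {
        type: "function",
        function: {
          name: "route_query",
          description: "Route a customer query to the right team",
          parameters: {
            type: "object",
            properties: {
              category: {
                type: "string",
                enum: ["billing", "support", "general"],
              },
            },
            required: ["category"],
            additionalProperties: false,
          },
        },
      },
    ],
  };
}

// When the model calls a function, the arguments arrive as a JSON string
// under choices[0].message.tool_calls.
function readToolCall(response) {
  const call = response?.choices?.[0]?.message?.tool_calls?.[0];
  if (!call) {
    return null; // the model answered in plain text instead
  }
  return {
    name: call.function.name,
    args: JSON.parse(call.function.arguments),
  };
}

console.log(buildToolRequest("My payment failed").tools[0].function.name);
// route_query
```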

Basic Setup

- Open your flow in the Cue Flow Builder

- Add an HTTP request node where you want the AI interaction to occur

- Configure the HTTP request with the following settings:

  - URL: https://api.openai.com/v1/chat/completions
  - Method: POST
  - Authorization: Bearer Token
  - Token: ExampleToken
  - Body:

        {
          "model": "gpt-4.1-mini",
          "messages": [
            {
              "role": "user",
              "content": "Categorize this query: '{{session.message.text}}'"
            }
          ],
          "response_format": {
            "type": "json_schema",
            "json_schema": {
              "name": "query_categorization",
              "strict": true,
              "schema": {
                "type": "object",
                "properties": {
                  "query": {
                    "type": "string",
                    "description": "The original user query"
                  },
                  "category": {
                    "type": "string",
                    "enum": ["billing", "support", "general"],
                    "description": "The most appropriate category"
                  },
                  "confidence": {
                    "type": "number",
                    "minimum": 0,
                    "maximum": 1,
                    "description": "Confidence score for the categorization"
                  }
                },
                "required": ["query", "category", "confidence"],
                "additionalProperties": false
              }
            }
          }
        }

The response from OpenAI:

{

"id": "chatcmpl-RandomId",

"object": "chat.completion",

"created": 1748503764,

"model": "gpt-4.1-mini-2025-04-14",

"choices": [

{

"index": 0,

"message": {

"role": "assistant",

"content": "{\"query\":\"I have an issue with my account, for some reason the payment is not going through.?\",\"category\":\"billing\",\"confidence\":0.95}",

"refusal": null,

"annotations": []

},

"logprobs": null,

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 112,

"completion_tokens": 32,

"total_tokens": 144,

"prompt_tokens_details": {

"cached_tokens": 0,

"audio_tokens": 0

},

"completion_tokens_details": {

"reasoning_tokens": 0,

"audio_tokens": 0,

"accepted_prediction_tokens": 0,

"rejected_prediction_tokens": 0

}

},

"service_tier": "default",

"system_fingerprint": "fp_randomId"

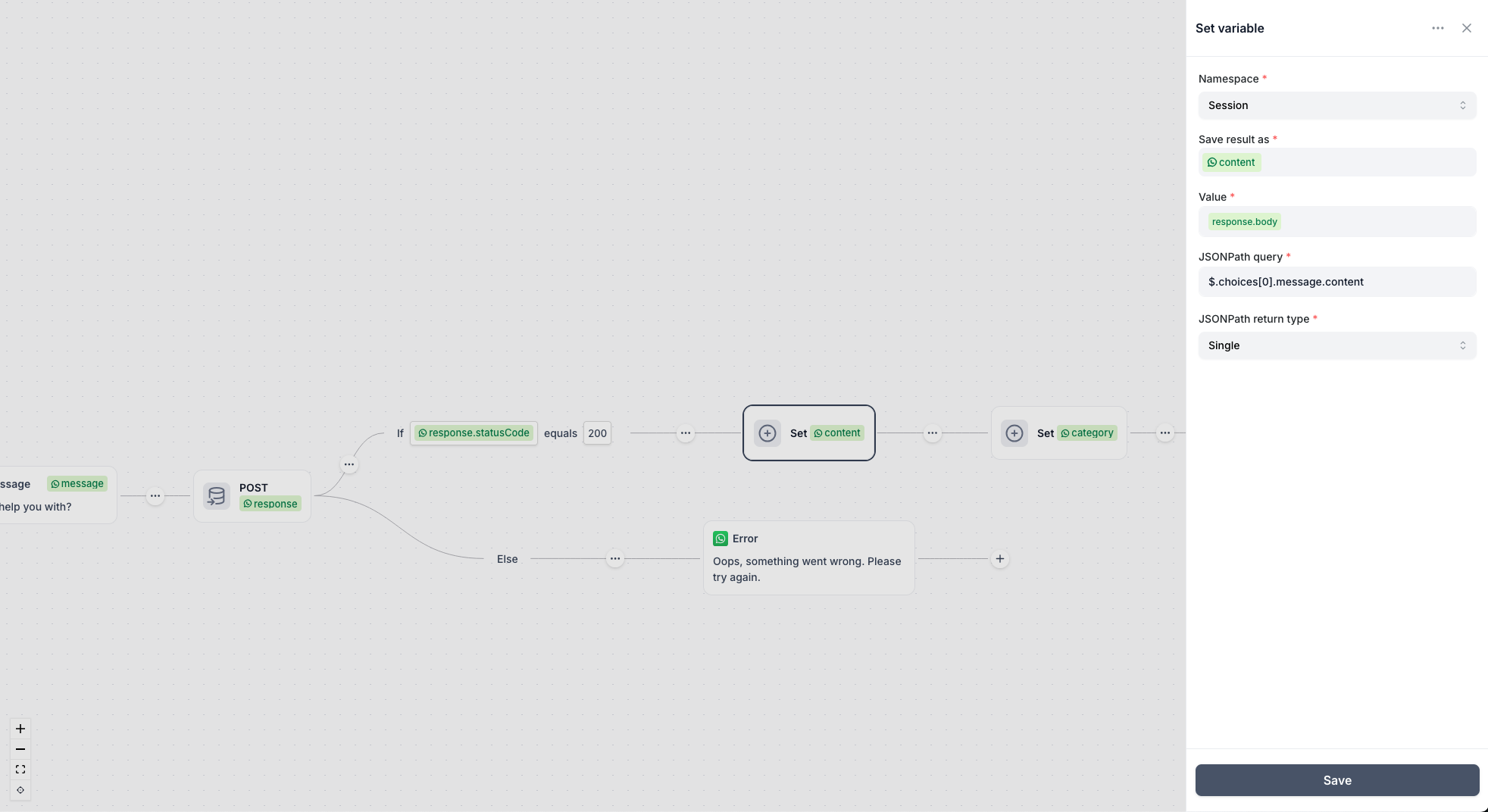

}Extract the content from the response message using the Cue Set node. I used {{session.response.statusCode}} = 200 If statement for error management.

- Set node to extract message content.

$.choices[0].message.content

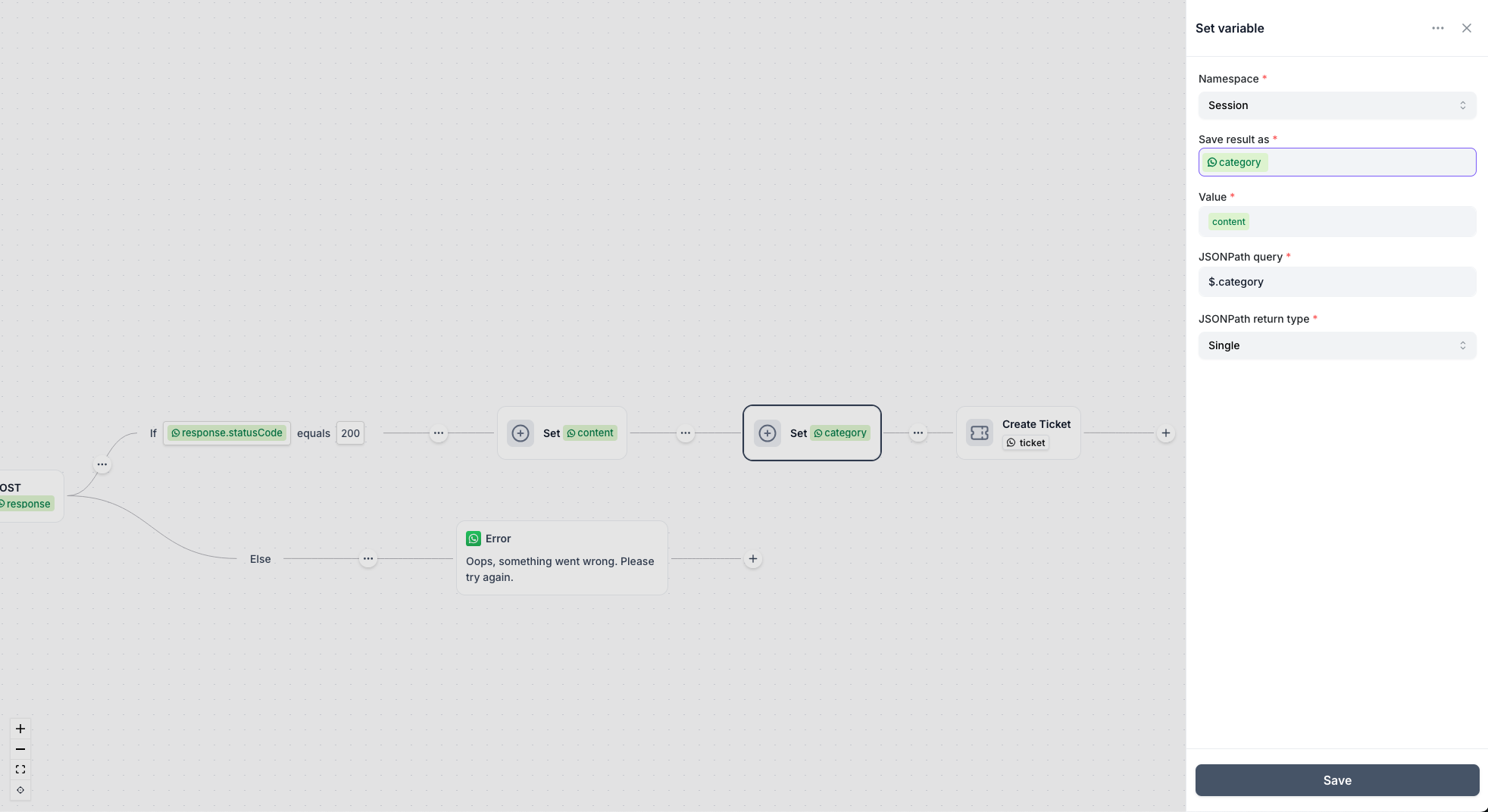

2. Set node to extract category from the content. $.category

3. Dynamically assigning to Team based on Category.
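The three steps above amount to parsing the content string and mapping the category to a team. A minimal sketch of that logic, assuming made-up team names and an arbitrary confidence threshold of 0.7 (neither comes from Cue), might look like this:

```javascript
// Hypothetical mapping from category to team name; replace with your own teams.
const TEAM_BY_CATEGORY = new Map([
  ["billing", "Billing Team"],
  ["support", "Support Team"],
  ["general", "General Enquiries"],
]);

// Parse message.content (a JSON string) and pick a team.
// minConfidence is an assumed threshold; low-confidence or unparseable
// results fall back to the general team.
function routeQuery(content, minConfidence = 0.7) {
  const fallback = TEAM_BY_CATEGORY.get("general");
  let parsed;
  try {
    parsed = JSON.parse(content);
  } catch {
    return fallback;
  }
  if (!parsed || typeof parsed !== "object") {
    return fallback;
  }
  const team = TEAM_BY_CATEGORY.get(parsed.category);
  const confident =
    typeof parsed.confidence === "number" && parsed.confidence >= minConfidence;
  return team && confident ? team : fallback;
}

// The content string from the sample response in this section.
const content =
  "{\"query\":\"I have an issue with my account, for some reason the payment is not going through.?\",\"category\":\"billing\",\"confidence\":0.95}";
console.log(routeQuery(content)); // Billing Team
```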